Mixup, Manifold Mixup and CutMix

What will we do ?

In this post, we will see three data augmentation techniques: MixUp, Manifold MixUp and Cutmix. And how to implement them in fastai2.

To implement them in fastai2, we'll use callbacks.

Callbacks are what is used inside fastai to inject custom behavior in the training loop (we use 'cbs=' argument inside the Learner).

Note that these techniques require far more epochs to train.

Data augmentation

Data augmentation is a technique used to enrich your dataset, by creating synthetic data generated from your original data.

This is used as a regularization technique, in other means your model will generalize better to new data that he has not seen before.

DNN have a problem, they predict overconfidently stuff, so with regularization we have better genralization and smoother decision boundary (transition from one class to the other).

The three techniques that we'll see provide an additional benefit that is your model will get more robust to corrupted labels and adverserial attacks.

Adverserial attacks are noise added to your input, inperceptible by the human eye but will confuse your model to producing totally different predictions from the ground truth labels.

1. Mixup

The idea is pretty basic :

- Select two samples (images in our case) at random from the dataset.

- Select a weight at random (from the Beta distribution where alpha is a hyperparameter, i.e. λ ∼ Beta(α, α)).

- Take a weighted average of the two samples previously chosen using that weight, this is your independent variable.

- Take a weighted average of the targets of your corresponding images, with the same weight this will be your dependant variable.

Then you continue the usual procedure for training your DNN.

Your targets must be one-hot-encoded.

The related paper:mixup: Beyond Empirical Risk Minimization

Here's the code implementation of it as described in the paper.

# Take two samples at a time

for (x1, y1), (x2, y2) in zip(loader1, loader2):

# Select a random weight

lam = numpy.random.beta(alpha, alpha)

# Take the weighted average of the two samples, to obtain your independent variable

x = Variable(lam * x1 + (1. - lam) * x2)

# Take the weighted average of the targets, to obtain your dependent variable

y = Variable(lam * y1 + (1. - lam) * y2)

# Continue training as usual

optimizer.zero_grad()

loss(net(x), y).backward()

optimizer.step()

MixUp is already available as a Callback in fastai2.

# Import callback

from fastai.callback.mixup import *

# Create model

learn_with_mixup = Learner(dls, resnet18(), metrics=accuracy, cbs = MixUp(0.2))

# Train model

learn_with_mixup.fit_one_cycle(5)

Now let's see the remaining two techniques, which are just variants of this one.

2. Manifold mixup

It's the same idea as MixUp but instead of just applying it for the input (i.e. before the first layer), we can apply this mixing before another layer of the model.

So the procedure goes, as described in the paper :

- we select a random layer k from a set of eligible layers S in the neural network. This set may include the input layer.

- we process two random data minibatches (x1, y1) and (x2, y2) as usual, until reaching layer k. This provides us with two intermediate minibatches (result_1, y1) and (result_2, y2).

- we perform MixUp on these intermediate minibatches. This produces the mixed minibatch (mixed_result, mixed_y).

- we continue the forward pass in the network from layer k until the output using the mixed minibatchs.

- this output is used to compute the loss value and

gradients that update all the parameters of the neural network.

The related paper:Manifold Mixup: Better Representations by Interpolating Hidden States

Here's a pseudo-code to understand the implementation.

# Creating a simple network

first_layer = nn.Linear(20, 10)

second_layer = nn.Relu()

third_layer = nn.Linear(10, 1)

# Pick eligible layers before training

eligible = first_layer, third_layer

# Take two samples at a time

for (x1, y1), (x2, y2) in zip(loader1, loader2):

# Choose a layer at random (in the paper it was from a uniform distribution)

chosen = choose_random(eligile)

# Let's say chosen == third_layer

# Forward pass through first and second layer

result_xb1 = second_layer(first_layer(xb1))

result_xb2 = second_layer(first_layer(xb2))

# Select a random weight

lam = numpy.random.beta(alpha, alpha)

# Take the weighted average of the two intermediate results

new_result = result_xb1 * lam + result_xb2 * (1 - lam)

# Take the weighted average of the targets, to obtain your dependent variable

y = yb1 * lam + yb2 * (1 - lam)

# Continue forward pass with the new_result

pred = third_layer(new_result)

# Continue training as usual

optimizer.zero_grad()

loss(pred, y).backward()

optimizer.step()

Now this one is not availble in fastai2, but no worries there is a module created by a guy called Nestor Demeure from the fastai community who implemented the manifold mixup as a Callback for us to use in fastai2.

Github repo of the module : ManifoldMixupV2

# Clone the repo

!git clone https://github.com/nestordemeure/ManifoldMixupV2.git

# Copy the module in the current directory

!cp /content/ManifoldMixupV2/manifold_mixup.py /content/

# Import the module

from manifold_mixup import *

# Create the model

learn_with_Mmixup = Learner(dls, resnet18(), metrics=accuracy, cbs=ManifoldMixup(0.2))

# Train the model

learn_with_Mmixup.fit_one_cycle(5)

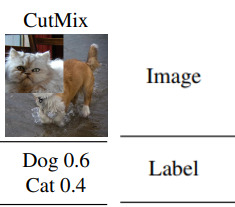

3. Cutmix

Finally the most recent of the three, which again follows the same procedure as MixUp (is applied to input only) but instead of mixing the random samples, we cut a portion of one sample and patch it into the other, this will be your independent variable.

Now for your dependent variable, you take the propotion of each sample appearing in the new one created.

Here's an image from the paper that will help you understand.

The related paper:CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features

CutMix is also availble as a Callback in fastai2.

# Import callback

from fastai.callback.cutmix import *

# Create the model

learn_cut_mix = Learner(dls, resnet18(), metrics=accuracy, cbs=CutMix(2.0))

# Train the model

learn_cut_mix.fit_one_cycle(5)